Over the course of 2022 and early 2023, tech innovators unleashed generative AI en masse, dazzling business leaders, investors, and society at large with the technology’s ability to create entirely new and seemingly human-made text and images.

In just five days, one million users flocked to ChatGPT, OpenAI’s generative AI language model that creates original content in response to user prompts. It took Apple more than two months to reach the same level of adoption for its iPhone. Facebook had to wait ten months and Netflix more than three years to build the same user base.

And ChatGPT isn’t alone in the generative AI industry. Stability AI’s Stable Diffusion, which can generate images based on text descriptions, garnered more than 30,000 stars on GitHub within 90 days of its release—eight times faster than any previous package.

This flurry of excitement isn’t just organizations kicking the tires. Generative AI use cases are already taking flight across industries. Financial services giant Morgan Stanley is testing the technology to help its financial advisers better leverage insights from the firm’s more than 100,000 research reports. The government of Iceland has partnered with OpenAI in its efforts to preserve the endangered Icelandic language. Salesforce has integrated the technology into its popular customer-relationship-management (CRM) platform.

The breakneck pace at which generative AI technology is evolving and new use cases are coming to market has left investors and business leaders scrambling to understand the generative AI ecosystem.

A brief explanation of generative AI

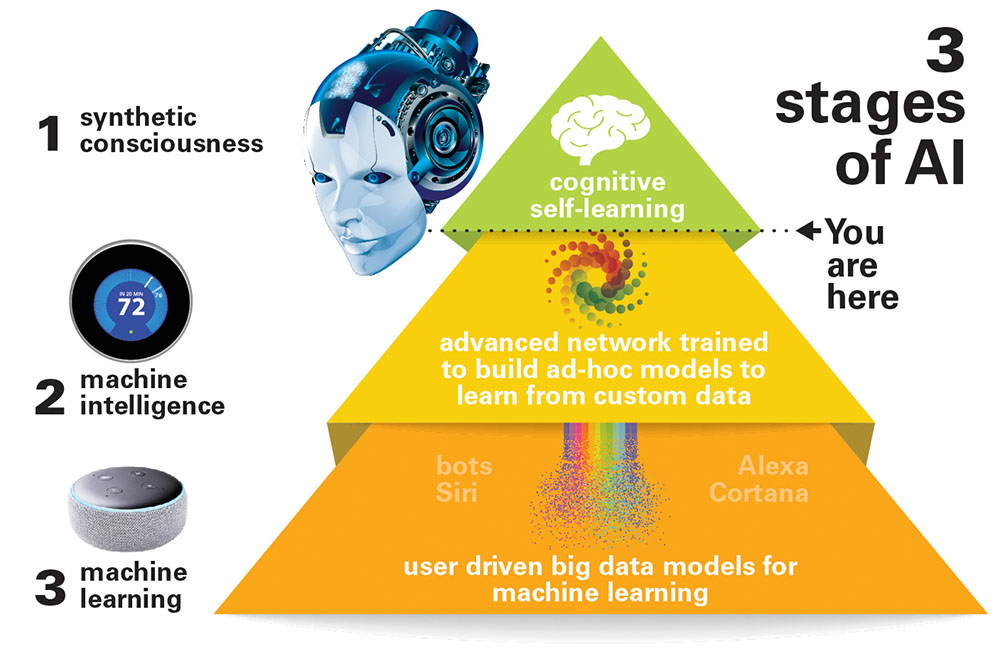

To understand the generative AI value chain, it’s helpful to have a basic knowledge of what generative AI is and how its capabilities differ from the “traditional” AI technologies that companies use to, for example, predict client churn, forecast product demand, and make next-best-product recommendations.

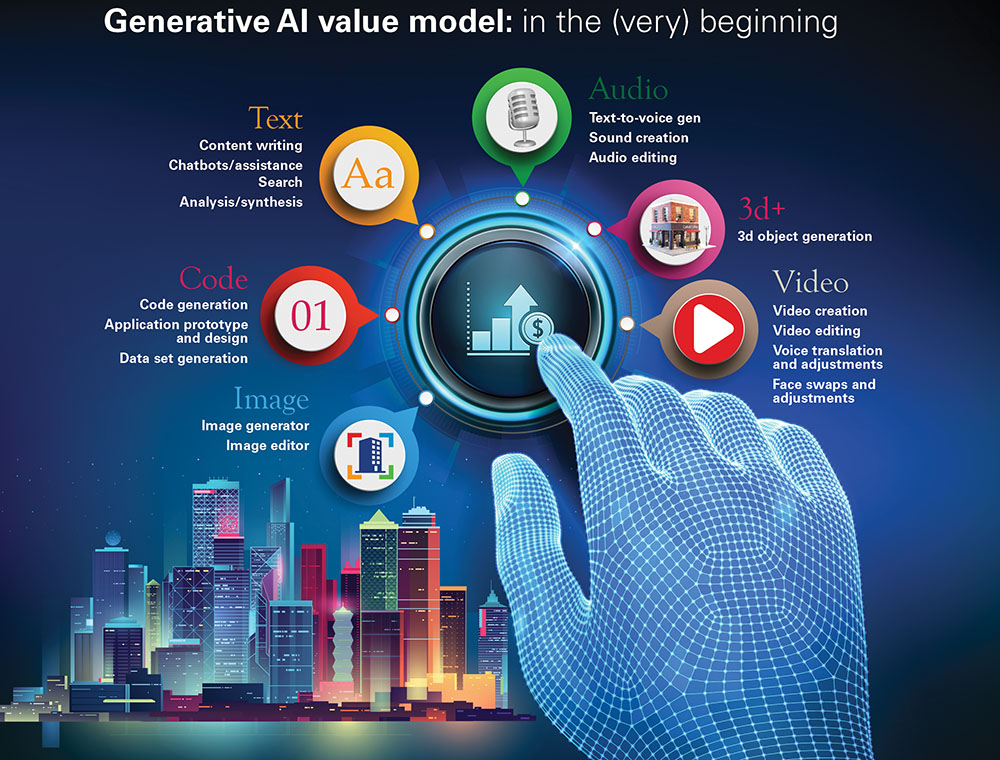

A key difference is its ability to create new content. This content can be delivered in multiple modalities including text (articles or answers to questions), images that look like photos or paintings, videos, and 3-D representations (scenes, landscapes for video games).

Even in these early days of the technology’s development, generative AI outputs have been jaw-droppingly impressive, winning digital-art awards and scoring among or close to the top 10 percent of test takers in numerous tests, including the U.S. bar exam for lawyers and the math, reading, and writing portions of the SAT, a college entrance exam used in the U.S.

Most generative AI models produce content in one format, but multimodal models that can, for example, create a slide or web page with both text and graphics based on a user prompt are also emerging.

All of this is made possible by training neural networks (a type of deep learning algorithm) on enormous volumes of data and applying “attention mechanisms,” a technique that helps AI models decide what to focus on. With these mechanisms, a generative AI system can identify word patterns, relationships, and the context of a user’s prompt (for instance, understanding that “blue” in the sentence “The cat sat on the mat, which was blue” represents the color of the mat and not of the cat).
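As a rough illustration of the idea, the sketch below implements scaled dot-product attention, one common form of attention mechanism, in plain Python. The two-dimensional vectors for “cat,” “mat,” and “blue” are hypothetical values chosen by hand so that “blue” lines up with “mat”; a real model learns such vectors from data and works with far larger dimensions.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    # Similarity between the query and every key, scaled by sqrt(dimension).
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # The output is the weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Hypothetical embeddings, hand-picked for illustration only.
tokens = ["cat", "mat"]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
blue_query = [0.0, 4.0]  # assumed: "blue" points in the same direction as "mat"

weights, output = attention(blue_query, keys, values)
for token, weight in zip(tokens, weights):
    print(f"{token}: {weight:.2f}")
```

With these assumed vectors, nearly all of the attention weight for “blue” lands on “mat” rather than “cat,” which is the behavior the example sentence describes.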

Traditional AI also might use neural networks and attention mechanisms, but these models aren’t designed to create new content. They can only describe, predict, or prescribe something based on existing content.

As the development and deployment of generative AI systems get under way, a new value chain is emerging to support the training and use of this powerful technology. At a glance, one might think it’s quite similar to a traditional AI value chain. After all, of the six top-level categories (computer hardware, cloud platforms, foundation models, model hubs and machine learning operations, applications, and services), only foundation models are a new addition.

However, a deeper look reveals some significant differences in market opportunities. To begin, the underpinnings of generative AI systems are appreciably more complex than most traditional AI systems. Accordingly, the time, cost, and expertise associated with delivering them give rise to significant headwinds for new entrants and small companies across much of the value chain. While pockets of value exist throughout, our research suggests that many areas will continue to be dominated by tech giants and incumbents for the foreseeable future.

The generative AI application market is the section of the value chain expected to expand most rapidly and offer significant value-creation opportunities to both incumbent tech companies and new market entrants. Companies that use specialized or proprietary data to fine-tune applications can achieve a significant competitive advantage over those that don’t.

Computer hardware

Generative AI systems need knowledge—and lots of it—to create content. OpenAI’s GPT-3, the generative AI model underpinning ChatGPT, for example, was trained on about 45 terabytes of text data (akin to nearly one million feet of bookshelf space).

Training on that much data is not something traditional computer hardware can handle. These types of workloads require large clusters of graphics processing units (GPUs) or tensor processing units (TPUs) with specialized accelerator chips capable of processing all that data across billions of parameters in parallel.

Once training of this foundational generative AI model is completed, businesses may also use such clusters to customize the models (a process called “tuning”) and run these power-hungry models within their applications. However, compared with the initial training, these latter steps require much less computational power.

While there are a few smaller players in the mix, the design and production of these specialized AI processors are concentrated. NVIDIA and Google dominate the chip design market, and one player, Taiwan Semiconductor Manufacturing Company Limited (TSMC), produces almost all of the accelerator chips. New market entrants face high start-up costs for research and development. Traditional hardware designers must develop the specialized skills, knowledge, and computational capabilities necessary to serve the generative AI market.

Cloud platforms

GPUs and TPUs are expensive and scarce, making it difficult and not cost-effective for most businesses to acquire and maintain this vital hardware platform on-premises. As a result, much of the work to build, tune, and run large AI models occurs in the cloud. This enables companies to easily access computational power and manage their spend as needed.

Unsurprisingly, the major cloud providers have the most comprehensive platforms for running generative AI workloads and preferential access to the hardware and chips. Specialized cloud challengers could gain market share, but not in the near future and not without support from a large enterprise seeking to reduce its dependence on hyperscalers.

Foundation models

At the heart of generative AI are foundation models. These large deep learning models are pretrained to create a particular type of content and can be adapted to support a wide range of tasks. A foundation model is like a Swiss Army knife—it can be used for multiple purposes. Once the foundation model is developed, anyone can build an application on top of it to leverage its content-creation capabilities. Consider OpenAI’s GPT-3 and GPT-4, foundation models that can produce human-quality text. They power dozens of applications, from the much-talked-about chatbot ChatGPT to software-as-a-service (SaaS) content generators Jasper and Copy.ai.

Foundation models are trained on massive data sets. These may include public data scraped from Wikipedia, government sites, social media, and books, as well as private data from large databases. OpenAI, for example, partnered with Shutterstock to train its image model on Shutterstock’s proprietary images.

Developing foundation models requires deep expertise in several areas. These include preparing the data, selecting the model architecture that can create the targeted output, training the model, and then tuning the model to improve output (which entails labeling the quality of the model’s output and feeding it back into the model so it can learn).

Today, training foundation models in particular comes at a steep price, given the repetitive nature of the process and the substantial computational resources required to support it. In the beginning of the training process, the model typically produces random results. To improve its next output so it is more in line with what is expected, the training algorithm adjusts the weights of the underlying neural network. It may need to do this millions of times to get to the desired level of accuracy. Currently, such training efforts can cost millions of dollars and take months.
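The loop described above (start from a random guess, compare the output with what is expected, adjust the weights, repeat) can be sketched with a toy model that has a single weight. This is only an illustration under simple assumptions: the data, the learning rate, and the number of steps are made up, and real foundation models adjust billions of weights using far more elaborate optimizers.

```python
import random

random.seed(0)  # fixed seed so the sketch is reproducible

# Toy data following y = 3x; the "model" has to discover the 3.
data = [(x, 3.0 * x) for x in [1.0, 2.0, 3.0, 4.0]]

weight = random.uniform(-1.0, 1.0)  # training starts from a random weight
learning_rate = 0.01  # assumed step size for this toy problem


def mean_squared_error(w):
    """Average squared gap between the model's output and the expected one."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


initial_error = mean_squared_error(weight)

for step in range(500):
    # Gradient of the mean squared error with respect to the weight.
    gradient = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
    # Nudge the weight in the direction that reduces the error.
    weight -= learning_rate * gradient

final_error = mean_squared_error(weight)
print(f"learned weight: {weight:.3f}")
print(f"error before: {initial_error:.3f}, after: {final_error:.6f}")
```

Each pass is cheap here because there is one weight and four data points. The cost cited in the text comes from repeating the same kind of adjustment across billions of parameters and enormous data sets.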

Training OpenAI’s GPT-3, for example, is estimated to have cost $4 million to $12 million. As a result, the market is currently dominated by a few tech giants and start-ups backed by significant investment. However, work is in progress on smaller models that can deliver effective results for some tasks and on more efficient training, which could eventually open the market to more entrants.

Who benefits?

While generative AI will likely affect most business functions over the longer term, our research suggests that information technology, marketing and sales, customer service, and product development are most ripe for the first wave of applications.

Information technology. Generative AI can help teams write code and documentation. Already, automated coders on the market have improved developer productivity by more than 50 percent, helping to accelerate software development.

Marketing and sales. Teams can use generative AI applications to create content for customer outreach. Within two years, 30 percent of all outbound marketing messages are expected to be developed with generative AI systems.

Customer service. Natural-sounding, personalized chatbots and virtual assistants can handle customer inquiries, recommend swift resolution, and guide customers to the information they need. Companies such as Salesforce, Dialpad, and Ada have already announced offerings in this area.

Product development. Companies can use generative AI to rapidly prototype product designs. Life sciences companies, for instance, have already started to explore the use of generative AI to help generate sequences of amino acids and DNA nucleotides to shorten the drug design phase from months to weeks.

In the near term, some industries can leverage these applications to greater effect than others. The media and entertainment industry can become more efficient by using generative AI to produce unique content (for example, localizing movies without the need for hours of human translation) and rapidly develop ideas for new content and visual effects for video games, music, movie story lines, and news articles. Banking, consumer, telecommunications, life sciences, and technology companies are expected to experience outsize operational efficiencies given their considerable investments in IT, customer service, marketing and sales, and product development.

Services

Existing AI service providers are expected to evolve their capabilities to serve the generative AI market. Niche players may also enter the market with specialized knowledge for applying generative AI within a specific function (such as how to apply generative AI to customer service workflows), industry (for instance, guiding pharmaceutical companies on the use of generative AI for drug discovery), or capability (such as how to build effective feedback loops in different contexts).

While generative AI technology and its supporting ecosystem are still evolving, it is already quite clear that applications offer the most significant value-creation opportunities. Those who can harness niche—or, even better, proprietary—data in fine-tuning foundation models for their applications can expect to achieve the greatest differentiation and competitive advantage. The race has already begun, as evidenced by the steady stream of announcements from software providers—both existing and new market entrants—bringing new solutions to market.

Excerpt: Tobias Härlin, Gardar Björnsson Rova, Alex Singla, and Alex Sukharevsky, McKinsey