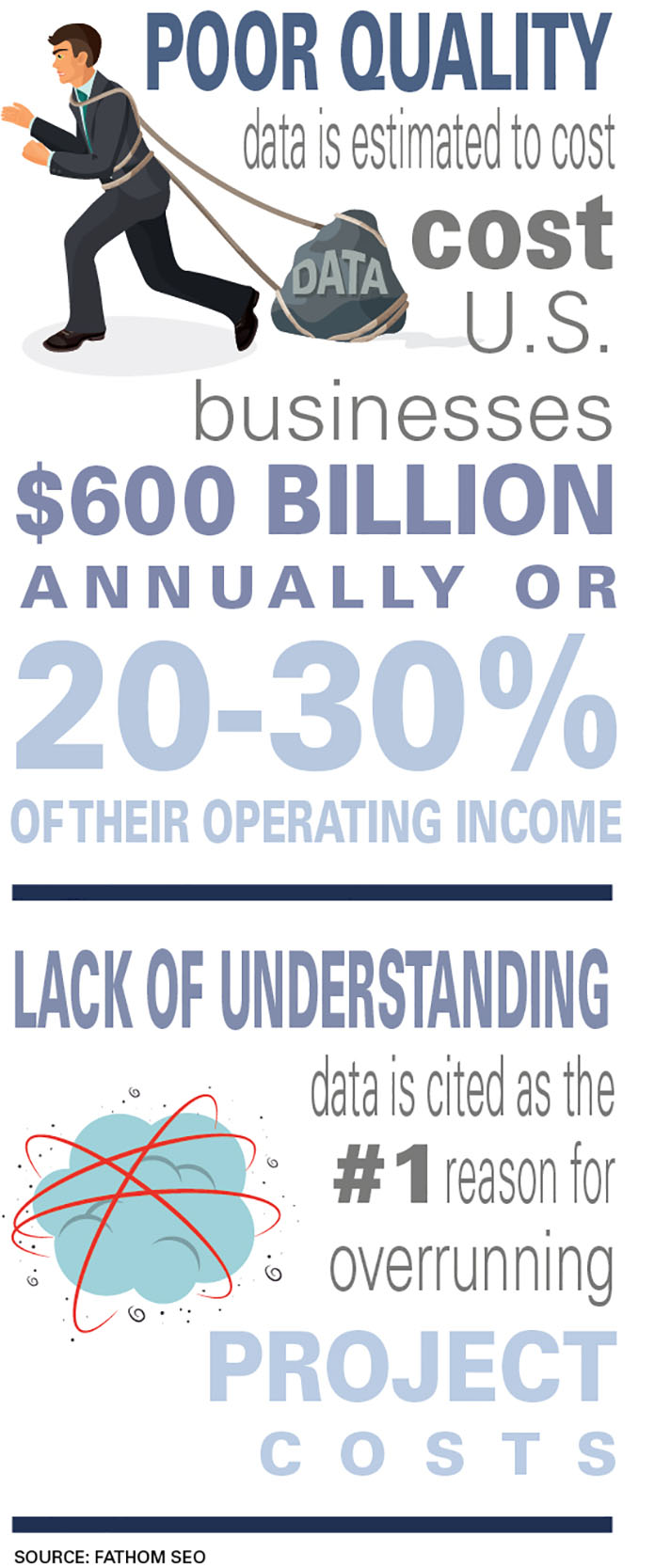

Poor data quality is enemy number one to the widespread, profitable use of machine learning. While the caustic observation, “garbage-in, garbage-out” has plagued analytics and decision-making for generations, it carries a special warning for machine learning.

The quality demands of machine learning are steep, and bad data can rear its ugly head twice—first in the historical data used to train the predictive model and second in the new data used by that model to make future decisions.

To properly train a predictive model, historical data must meet exceptionally broad and high-quality standards. First, the data must be right: It must be correct, properly labeled, de-duped, and so forth. But you must also have the right data—lots of unbiased data, over the entire range of inputs for which you aim to develop the predictive model. Most data quality work focuses on one criterion or the other, but for machine learning, you must work on both simultaneously.

Yet today, most data fails to meet basic “data are right” standards. Reasons range from data creators not understanding what is expected, to poorly calibrated measurement gear, to overly complex processes, to human error.

To compensate, data scientists cleanse the data before training the predictive model. It is time-consuming, tedious work (taking up to 80 percent of data scientists’ time), and it’s the problem data scientists complain about most.

Even with such efforts, cleaning neither detects nor corrects all the errors, and as yet, there is no way to understand the impact on the predictive model. What’s more, data does not always meets “the right data” standards, as reports of bias in facial recognition and criminal justice attest.

The problem with quality data

Increasingly-complex problems demand not just more data, but more diverse, comprehensive data. And with this comes more quality problems. For example, handwritten notes and local acronyms have complicated IBM’s efforts to apply machine learning (e.g., Watson) to cancer treatment.

Data quality is no less troublesome in implementation. Consider an organization seeking productivity gains with its machine learning program. While the data science team that developed the predictive model may have done a solid job cleaning the training data, it can still be compromised by bad data going forward.

Again, it takes people—lots of them—to find and correct the errors. This in turns subverts the hoped-for productivity gains. Further, as machine learning technologies penetrate organizations, the output of one predictive model will feed the next, and the next, and so on, even crossing company boundaries. The risk is that a minor error at one step will cascade, causing more errors and growing ever larger across an entire process.

These concerns must be met with an aggressive, well-executed quality program, far more involved than required for day-in, day-out business. It requires the leaders of the overall effort to take all of the following five steps.

Step one: clarify

First, clarify your objectives and assess whether you have the right data to support these objectives. Consider a mortgage-origination company that wishes to apply machine learning to its loan process. Should it grant the loan and, if so, under what terms? Possible objectives for using machine learning include:

Lowering the cost of the existing decision process. Since making better decisions is not an objective, existing data may be adequate.

Removing bias from the existing decision process. This bias is almost certainly reflected in its existing data. Proceed with caution.

Improving the decision-making process—granting fewer loans which default and approving previously-rejected loans that will perform. Note that while the company has plenty of data on previously-rejected mortgages, it does not know whether these mortgages would have performed. Proceed with extreme caution.

When the data fall short of the objectives, the best recourse is to find new data, to scale back the objectives, or both.

Step two: set the time needed

Second, build plenty of time to execute data quality fundamentals into your overall project plan. For training, this means four person-months of cleaning for every person-month building the model, as you must measure quality levels, assess sources, de-duplicate, and clean training data, much as you would for any important analysis. For implementations, it is best to eliminate the root causes of error and so minimize ongoing cleaning.

Doing so will have the salutary effect of eliminating hidden data factors, saving you time and money in operations as well. Start this work as soon as possible and at least six months before you wish to let your predictive model loose.

Step three: audit trail

Third, maintain an audit trail as you prepare the training data. Maintain a copy of your original training data, the final data you used in training, and the steps used in getting from the first to the second.

Doing so is simply good practice (though many unwisely skip it), and it may help you make the process improvements you’ll need to use your predictive model in future decisions. Further, it is important to understand the biases and limitations in your model and the audit trail can help you sort it out.

Step four: responsible party

Fourth, charge a specific individual (or team) with responsibility for data quality as you turn your model loose. This person should possess intimate knowledge of the data, including its strengths and weaknesses, and have two foci.

First, day-in and day-out, they set and enforce standards for the quality of incoming data. If the data aren’t good enough, humans must take over. Second, they lead ongoing efforts to find and eliminate root causes of error. This work should already have started and it must continue.

Step five: independent review

Finally, obtain independent, rigorous quality assurance. As used here, quality assurance is the process of ensuring that the quality program provides the desired results. The watchword here is independent, so this work should be carried out by others—an internal QA department, a team from outside the department, or a qualified third party.

Even after taking these five steps, you will certainly find that your data is not perfect. You may be able to accommodate some minor data quality issues in the predictive model, such as a single missing value among the fifteen most important variables.

To explore this area, pair data scientists and your most experienced businesspeople when preparing the data and training the model.

Laura Kornhauser, of Stratyfy, Inc., a start-up focused on bringing transparency and accountability to artificial intelligence, put it this way: “Bring your businesspeople and your data scientists together as soon as possible. Businesspeople, in particular, have dealt with bad data forever, and you need to build their expertise into your predictive model.”

Seem like a lot? It is. But machine learning has incredible power and you need to learn to tap that power. Poor data quality can cause that power to be delayed, denied, or misused, fully justifying every ounce of the effort.

Author: Thomas C. Redman, “the Data Doc,” is president of Data Quality Solutions.