Social credit will shift law in the west “from constitution to analytics and algorithm”

—Penn State professor

Will AI-driven social scores supplant the constitution? Professor Larry Backer of Penn State University writes in a 2018 paper that resistance to social credit systems in the west could be dissolved when the masses are “socialized…as a collective” and “…the great culture management machinery of Western society develop a narrative in which such activity is naturalized within Western culture.”

The paper discusses ways in which a social credit system could be implemented in the west. Backer writes that the shift in law with this system will “change the focus of public law from constitution and rule of law to analytics and algorithm.”

In the paper, titled “Next Generation Law: Data Driven Governance and Accountability Based Regulatory Systems in the West, and Social Credit Regimes in China,” Backer describes moves to social credit in the West as “fractured,” but gives guidance on how societal norms could be steadily pushed to accept this system.

We know what it takes to create liveable communities.

As landlords, it’s our business. After all, no one likes uncivil, rude, or reckless behavior. So why not track and ban unfavorable resident behavior? How much more efficiently might our businesses run if we were able to banish residents from our properties through an aggregated social score? It’s illegal for good reason.

What a contrast, then, that companies such as Uber, Airbnb, Facebook and Google have collected social scores on their customers and freely ban for life those they deem unacceptable. Airbnb expressly notifies those it bans: “This decision is irreversible and will affect any duplicated or future accounts. Please understand that we are not obligated to provide an explanation for the action taken against your account.”

Social rule is invasive, secretive and outside the rule of law. The new world of algorithms and automation has given tech companies cover—for now.

The U.S. is a nation of laws, and for good reason. The alternative, social rule, is a system with no rights and an intentional move to avert due process.

Social credit scores are here

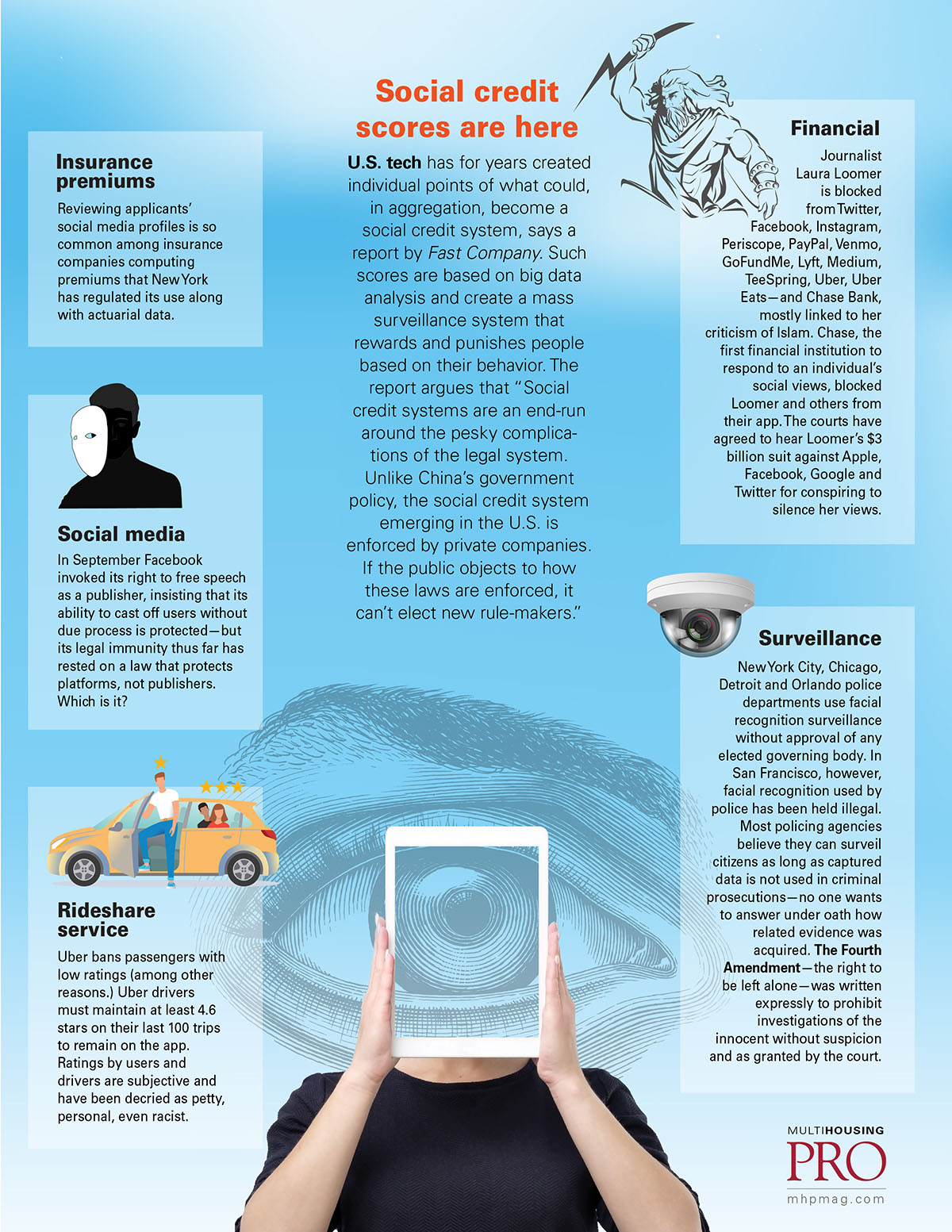

U.S. tech has for years created individual points of what could, in aggregation, become a social credit system, says a report by Fast Company. Such scores are based on big data analysis and create a mass surveillance system that rewards and punishes people based on their behavior. The report argues that “Social credit systems are an end-run around the pesky complications of the legal system. Unlike China’s government policy, the social credit system emerging in the U.S. is enforced by private companies. If the public objects to how these laws are enforced, it can’t elect new rule-makers.”

Insurance premiums

Reviewing applicants’ social media profiles is so common among insurance companies computing premiums that New York has regulated its use along with actuarial data.

Social media

In September Facebook invoked its right to free speech as a publisher, insisting that its ability to cast off users without due process is protected—but its legal immunity thus far has rested on a law that protects platforms, not publishers. Which is it?

Rideshare service

Uber bans passengers with low ratings (among other reasons). Uber drivers must maintain at least 4.6 stars on their last 100 trips to remain on the app. Ratings by users and drivers are subjective and have been decried as petty, personal, even racist.

Financial Journalist

Laura Loomer is blocked from Twitter, Facebook, Instagram, Periscope, PayPal, Venmo, GoFundMe, Lyft, Medium, TeeSpring, Uber, Uber Eats and Chase Bank, bans mostly linked to her criticism of Islam. Chase, the first financial institution to respond to an individual’s social views, blocked Loomer and others from its app. The courts have agreed to hear Loomer’s $3 billion suit against Apple, Facebook, Google and Twitter, which alleges that they conspired to silence her views.

Surveillance

New York City, Chicago, Detroit and Orlando police departments use facial recognition surveillance without approval of any elected governing body. In San Francisco, however, police use of facial recognition has been banned. Most policing agencies believe they can surveil citizens as long as captured data is not used in criminal prosecutions; no one wants to answer under oath how related evidence was acquired. The Fourth Amendment, the right to be left alone, was written expressly to prohibit investigations of the innocent without suspicion and without authorization granted by a court.